The system used a single sensor, the Stereolabs ZED stereo camera. The camera provides a color image and can deliver a point cloud out to a range of 25 meters. Given the constraints of maximum vehicle speed and the desired response time, this was sufficient for the task. Using a single sensor kept the setup very simple, as no cross-calibration or synchronization was required. The sensor also performed very well, returning an impressively dense point cloud at 1080p and 30fps.
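The relationship between sensing range, vehicle speed, and response time can be sketched with simple arithmetic. This is only an illustration: the 25 m range comes from the text, while the response time and speed below are hypothetical placeholders, since the actual project figures are not stated here.

```python
SENSOR_RANGE_M = 25.0  # maximum point cloud range, from the text


def lookahead_time_s(range_m: float, speed_mps: float) -> float:
    """Time until the vehicle reaches the farthest point the sensor can see."""
    return range_m / speed_mps


def max_speed_for_range(range_m: float, response_time_s: float) -> float:
    """Highest speed at which the full response time still fits inside the range."""
    return range_m / response_time_s


# Hypothetical values, chosen only to show the calculation.
assumed_response_time_s = 2.0
assumed_speed_mps = 10.0

print(max_speed_for_range(SENSOR_RANGE_M, assumed_response_time_s))  # m/s
print(lookahead_time_s(SENSOR_RANGE_M, assumed_speed_mps))           # s
```

If the lookahead time at the maximum vehicle speed exceeds the desired response time, the sensor range is sufficient in this simplified sense. Braking distance is deliberately left out of the sketch.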

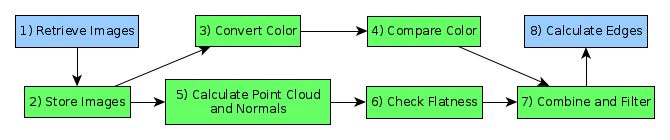

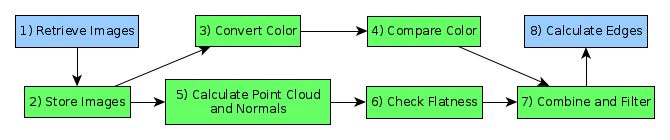

Because the stereo camera's point cloud was fairly dense, there was no need to accumulate data across frames, so the system operated one-shot, processing each frame independently. This greatly simplifies system documentation like the operating diagram shown below. CPU compute is shown in blue and GPU compute is shown in green.

The whole system operated at 30fps with 150ms latency from input frame to extracted edges. It ran on a laptop with an Intel i7-6820HQ and Nvidia M1000M (2GB) using Ubuntu 16.04 LTS. The laptop showed no more than 25% CPU usage while simultaneously recording data to file and displaying the input and final processed images in real time. The cooling fan did not noticeably speed up above idle, which suggests the GPU load was similarly low relative to its capabilities.
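The throughput and latency figures together say something about how the pipeline behaved. A small calculation, using only the 30fps and 150ms numbers from the text plus one hypothetical vehicle speed, shows that several frames must have been in flight at once and how far the vehicle travels before a frame's edges are available.

```python
FPS = 30.0         # throughput, from the text
LATENCY_S = 0.150  # input frame to extracted edges, from the text

frame_period_s = 1.0 / FPS

# Average number of frames inside the pipeline at any moment
# (throughput multiplied by latency).
frames_in_flight = LATENCY_S * FPS


def distance_during_latency_m(speed_mps: float) -> float:
    """Distance the vehicle covers between image capture and edge output."""
    return speed_mps * LATENCY_S


assumed_speed_mps = 10.0  # hypothetical speed, not a figure from the project

print(f"frame period: {frame_period_s * 1000:.1f} ms")
print(f"frames in flight: {frames_in_flight:.1f}")
print(f"travel during latency: {distance_during_latency_m(assumed_speed_mps):.2f} m")
```

Since the latency is several times the frame period, the stages must overlap rather than finish one frame before starting the next.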

As with any proof of concept, there's a lot to change for the prototype. Since this was pretty much just a pair of cameras and a computer, practically all the complexity is in the computation. There are two halves to the computation, though: the image computation performed by the camera's API and the edge extraction.

The camera produced a surprisingly dense point cloud. Having worked on and with stereo camera vision systems in the past, and being somewhat familiar with the state of the art, I found its performance impressive and intriguing. Some indoor testing provided a hint as to how it was achieved, when depth readings on repetitive fabric patterns behaved strangely. On a particular blue and white chevron pattern on a pillow viewed from 10 feet, the camera placed the white part of the pillow at the correct distance, but placed the blue part another 5 feet further away.
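To put the 5-foot error in perspective, the standard rectified-stereo relation, depth = focal length × baseline / disparity, converts the two depths into pixel disparities. This is a rough sketch only: the focal length and baseline below are assumed nominal values for a ZED at 1080p, not calibration figures from this project, and the camera's actual depth estimation does not necessarily work this way.

```python
FT_TO_M = 0.3048


def disparity_px(depth_m: float, focal_px: float, baseline_m: float) -> float:
    """Disparity of a point at depth_m for an ideal rectified stereo pair."""
    return focal_px * baseline_m / depth_m


# Assumed nominal values, not measured calibration data.
ASSUMED_FOCAL_PX = 1400.0
ASSUMED_BASELINE_M = 0.12

white_depth_m = 10 * FT_TO_M        # correct reading, from the text
blue_depth_m = (10 + 5) * FT_TO_M   # reading placed 5 feet further away

d_white = disparity_px(white_depth_m, ASSUMED_FOCAL_PX, ASSUMED_BASELINE_M)
d_blue = disparity_px(blue_depth_m, ASSUMED_FOCAL_PX, ASSUMED_BASELINE_M)

print(f"white: {d_white:.1f} px, blue: {d_blue:.1f} px")
print(f"disparity shift: {d_white - d_blue:.1f} px")
```

Under these assumptions the error corresponds to a disparity shift of roughly 18 pixels, far larger than sub-pixel matching noise.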

After some further investigation, it appears the depth measurement was performed using a neural engine. This also explained some results in which inconsistent depth readings occurred over a fairly uniform but highly textured surface. So while the depth readings were dense, they were not always accurate. A striking example is shown below, where the sampled space contained some erroneous surface normals, leading the system to believe the sides of a hill and a wooded area were flat (the area encircled in red).
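How depth errors turn into wrong surface normals can be illustrated with a generic normal estimate on an organized point grid. This is a minimal sketch, not the method used in the system: the cross-product estimator, the z-up coordinate frame, and the `make_patch` helper are all assumptions made for illustration.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v):
    length = math.sqrt(sum(c * c for c in v))
    # Degenerate neighbors give a zero vector; return it unchanged.
    return v if length == 0.0 else tuple(c / length for c in v)


def grid_normals(points):
    """Normals for a rows x cols grid of (x, y, z) points.

    Each normal is the cross product of the vectors to the right-hand
    and next-row neighbors, so the result has one fewer row and column.
    """
    normals = []
    for r in range(len(points) - 1):
        row = []
        for c in range(len(points[r]) - 1):
            p = points[r][c]
            right = sub(points[r][c + 1], p)
            down = sub(points[r + 1][c], p)
            row.append(unit(cross(right, down)))
        normals.append(row)
    return normals


def tilt_deg(normal, up=(0.0, 0.0, 1.0)):
    """Angle between a normal and the assumed up direction, in degrees."""
    dot = abs(sum(n * u for n, u in zip(normal, up)))
    return math.degrees(math.acos(min(1.0, dot)))


def make_patch(slope):
    """Hypothetical 3x3 patch whose height rises by `slope` per unit of y."""
    return [[(float(x), float(y), slope * y) for x in range(3)]
            for y in range(3)]


hillside = grid_normals(make_patch(1.0))   # true geometry: a 45 degree slope
flattened = grid_normals(make_patch(0.0))  # same patch with the slope lost

print(tilt_deg(hillside[0][0]), tilt_deg(flattened[0][0]))
```

If the depth estimate smooths a slope away, the normals computed from it point straight up, and a flatness test based on tilt alone cannot tell the hillside from the road.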

Because the system was also designed to detect color differences, however, it is still largely able to differentiate the road surface from the non-road areas.

Unfortunately, the neural engine meant there was no explainable vision pipeline, though this requirement may change in the future. Attempts to use more traditional, explainable methods did not yield the density or range needed for the required vehicle speed. From the experiments performed, it seems unlikely any passive camera system can handle the low texture of fresh snow or fresh asphalt. This implies that, for the foreseeable future, an active ranging system will be required to get useful range and reliability in distance measurements. With that in mind, work began on the next iteration.